I am currently building robotic foundation models at Skild AI. I was previously advised by Somayeh Sojoudi as a PhD student at Berkeley EECS. My research interests span robust machine learning, safe reinforcement learning, and diffusion-based control.

spfrommer(at)skild(dot)ai

Selected research

S. Pfrommer*, Y. Bai*, T. Gautam, S. Sojoudi. Ranking Manipulation for Conversational Search Engines. EMNLP 2024, Oral.

Major search engine providers are rapidly incorporating Large Language Model (LLM)-generated content in response to user queries. These conversational search engines operate by loading retrieved website text into the LLM context for summarization and interpretation. This work finds that adversarial prompt injections can strongly impact the ranking order of sources referenced by conversational search engines.

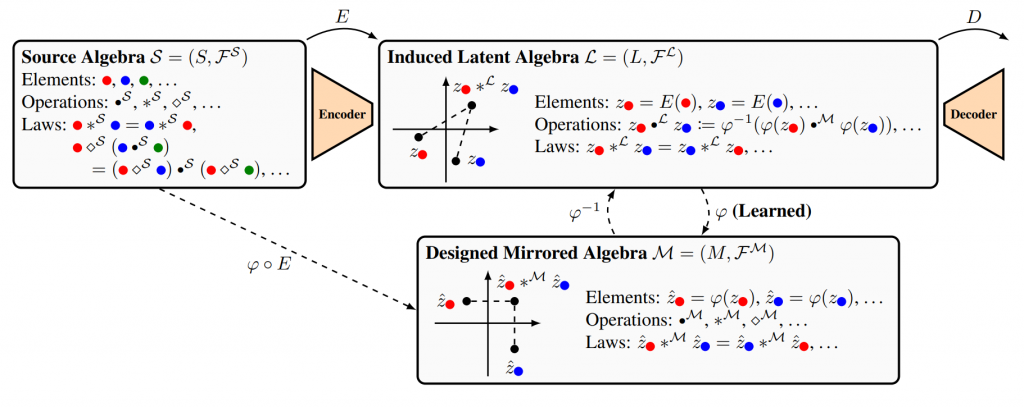

S. Pfrommer, B. Anderson, S. Sojoudi. Transport of Algebraic Structure to Latent Embeddings. ICML 2024, Spotlight.

In machine learning, it is common to produce latent embeddings of objects which live in algebraic spaces (e.g., sets, functions, and probability distributions). We present a principled approach for learning latent-space operations which correspond to operations on the underlying data-space algebra. Our approach constructs parameterizations which provably satisfy applicable algebraic laws such as commutativity, distributivity, and associativity.

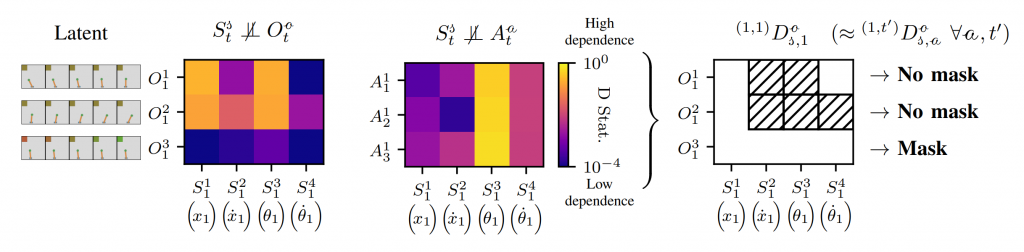
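As a rough illustration of the general idea of transporting structure (not the parameterization used in the paper), a latent operation can inherit commutativity and associativity from vector addition by conjugating it with a bijection psi: z1 ⊕ z2 = psi^{-1}(psi(z1) + psi(z2)). In this sketch psi is a fixed elementwise sinh, a hypothetical stand-in for a learned invertible network, and `latent_op` is an illustrative name.

```python
import numpy as np

# Hypothetical bijection psi: R^d -> R^d (elementwise sinh), standing in
# for a learned invertible network.
def psi(z):
    return np.sinh(z)

def psi_inv(u):
    return np.arcsinh(u)

def latent_op(z1, z2):
    """Transported operation z1 (+) z2 = psi^{-1}(psi(z1) + psi(z2)).

    Because vector addition is commutative and associative, and psi is a
    bijection, this latent operation satisfies both laws by construction.
    """
    return psi_inv(psi(z1) + psi(z2))

# Numerical check on random latent vectors.
rng = np.random.default_rng(0)
a, b, c = rng.normal(size=(3, 4))
print(np.allclose(latent_op(a, b), latent_op(b, a)))
print(np.allclose(latent_op(latent_op(a, b), c), latent_op(a, latent_op(b, c))))
```

This only covers commutativity and associativity; laws such as idempotence of set union or distributivity between two operations need additional structure beyond a single transported addition.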

S. Pfrommer, Y. Bai, H. Lee, S. Sojoudi. Initial State Interventions for Deconfounded Imitation Learning. CDC 2023.

We address the causal confusion problem in imitation learning, wherein learned policies attend to features which are only spuriously correlated with expert actions. Our approach uses a causal inference technique to mask spuriously correlated features without requiring expert querying, knowledge of the expert's reward function, or causal graph specification.

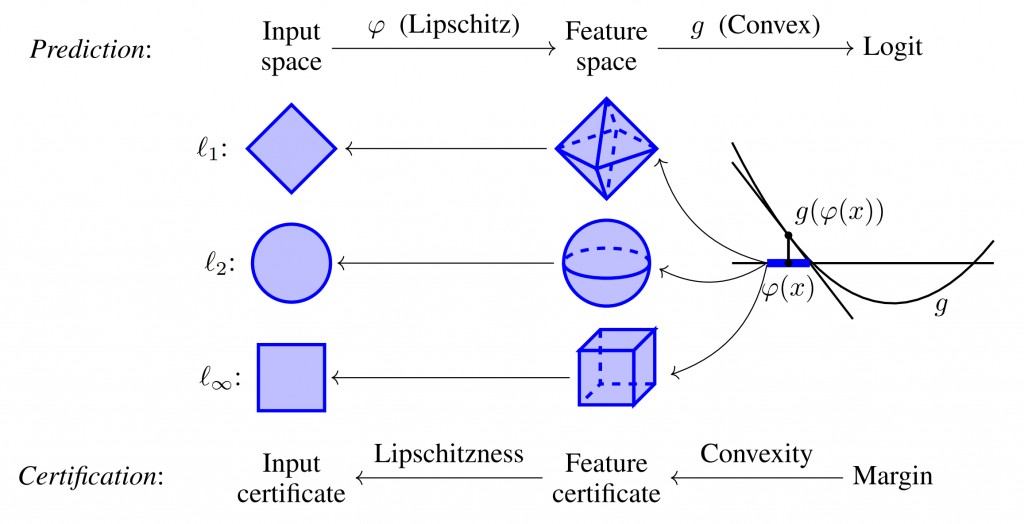

S. Pfrommer, B. Anderson, S. Sojoudi. Asymmetric Certified Robustness via Feature-Convex Neural Networks. NeurIPS 2023.

Certified robustness of classifiers has generally proven to be an intractable problem for all but the smallest of networks. We introduce a more focused asymmetric certified robustness setting, in which the adversary only attempts to induce false negatives. This work leverages input-convex neural networks to provide fast, closed-form certified radii in this practically relevant setting.

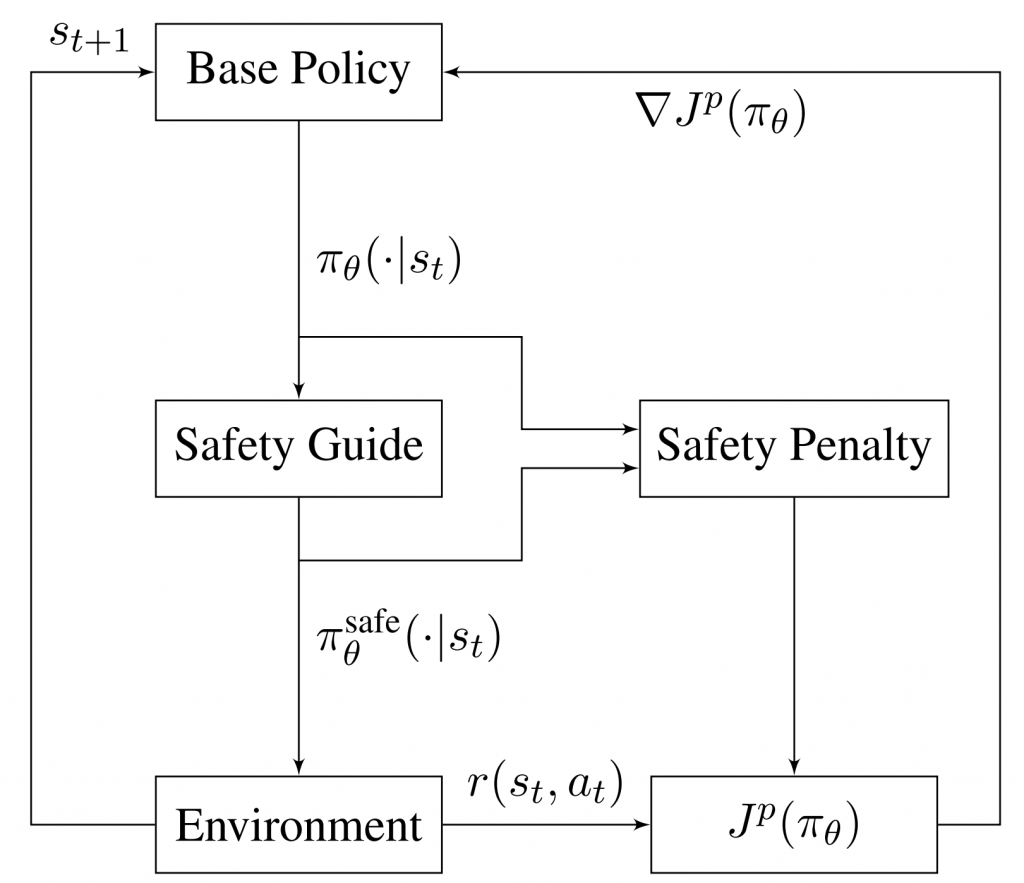
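A small sketch of why convexity yields closed-form radii, assuming a classifier that predicts the sensitive class when a convex score g(x) is positive. For any subgradient at x, convexity gives g(x + d) >= g(x) + grad·d >= g(x) - ||grad||_2 ||d||_2, so a positive prediction cannot flip for ||d||_2 < g(x) / ||grad||_2. The toy max-of-affine score and its weights `A`, `c` below are hypothetical stand-ins for the paper's feature map and input-convex network.

```python
import numpy as np

# Hypothetical convex score g(x) = max_k (A[k] @ x + c[k]); a max of affine
# functions is convex, like the output of an input-convex network.
A = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
c = np.array([-1.0, -1.0, -1.0])

def g(x):
    return np.max(A @ x + c)

def subgrad(x):
    # The slope of the active affine piece is a valid subgradient at x.
    return A[np.argmax(A @ x + c)]

def certified_radius(x):
    """l2 radius within which a positive prediction (g > 0) cannot flip."""
    score = g(x)
    if score <= 0:
        return 0.0  # only the sensitive (positive) class is certified
    return score / np.linalg.norm(subgrad(x))

print(certified_radius(np.array([3.0, 0.0])))  # 2.0
```

Here the bound is tight: the nearest point with g <= 0 is (1, 0), exactly distance 2 away.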

S. Pfrommer, T. Gautam, A. Zhou, S. Sojoudi. Safe Reinforcement Learning with Chance-constrained Model Predictive Control. L4DC 2022.

Reinforcement learning settings often require that agents obey user-specified safety constraints. We wrap a policy gradient learner with an MPC-based safety guide that ensures constraint satisfaction. Crucially, our policy gradient cost function includes a safety penalty term which results in a provably safe optimal base policy.

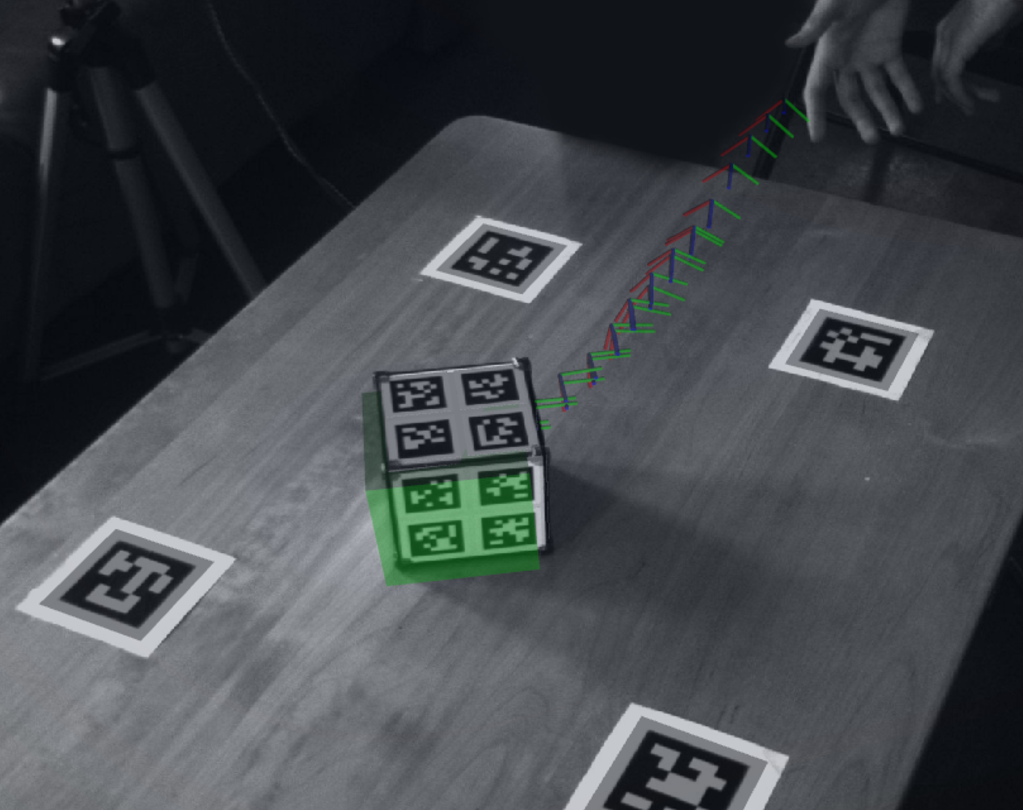
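For intuition on the "chance-constrained" part, a common building block in such MPC formulations is the deterministic reformulation of a linear Gaussian chance constraint: if x ~ N(mu, Sigma), then P(a·x <= b) >= 1 - eps holds exactly when a·mu + Phi^{-1}(1 - eps) sqrt(a^T Sigma a) <= b. This is a minimal sketch of that standard tightening under a Gaussian assumption, not necessarily the exact constraint used in the paper; `chance_constraint_ok` is a hypothetical helper name.

```python
import numpy as np
from statistics import NormalDist

def chance_constraint_ok(a, b, mu, Sigma, eps):
    """Check P(a @ x <= b) >= 1 - eps for x ~ N(mu, Sigma).

    Assumes a Gaussian state distribution; for eps < 0.5 the tightened
    constraint is convex in mu, which keeps the MPC problem tractable.
    """
    if not 0.0 < eps < 1.0:
        raise ValueError("eps must lie in (0, 1)")
    z = NormalDist().inv_cdf(1.0 - eps)       # standard normal quantile
    back_off = z * np.sqrt(a @ Sigma @ a)     # margin subtracted from b
    return a @ mu + back_off <= b

# Example: state component x[0] must stay below 1.0 with 95% probability.
a = np.array([1.0, 0.0])
Sigma = 0.04 * np.eye(2)                      # standard deviation 0.2
print(chance_constraint_ok(a, 1.0, np.array([0.5, 0.0]), Sigma, 0.05))
print(chance_constraint_ok(a, 1.0, np.array([0.8, 0.0]), Sigma, 0.05))
```

In a predictive controller, a check like this would be imposed on the predicted state distribution at each step of the horizon.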

S. Pfrommer, M. Halm, M. Posa. ContactNets: Learning Discontinuous Contact Dynamics with Smooth, Implicit Representations. CoRL 2020.

Common methods for learning robot dynamics assume motion is continuous, causing unrealistic model predictions for systems undergoing discontinuous impact and stiction behavior. In this work, we resolve this conflict with a smooth, implicit encoding of the structure inherent to contact-induced discontinuities. Our method can predict realistic impact, non-penetration, and stiction when trained on 60 seconds of real-world block tossing data.

Selected projects

TorchExplorer. TorchExplorer is a general-purpose tool to see what's happening in your network, analogous to an oscilloscope in electronics. It interactively visualizes model structure and input/output/parameter histograms during training. It integrates with Weights & Biases and can also run locally.