Last month I had the pleasure of presenting my research on learning contact dynamics at the Conference on Robot Learning (2020), together with my coauthors Mathew Halm and Michael Posa in the DAIR lab. The complete paper is available on arXiv, with a video demonstration of the methods here.

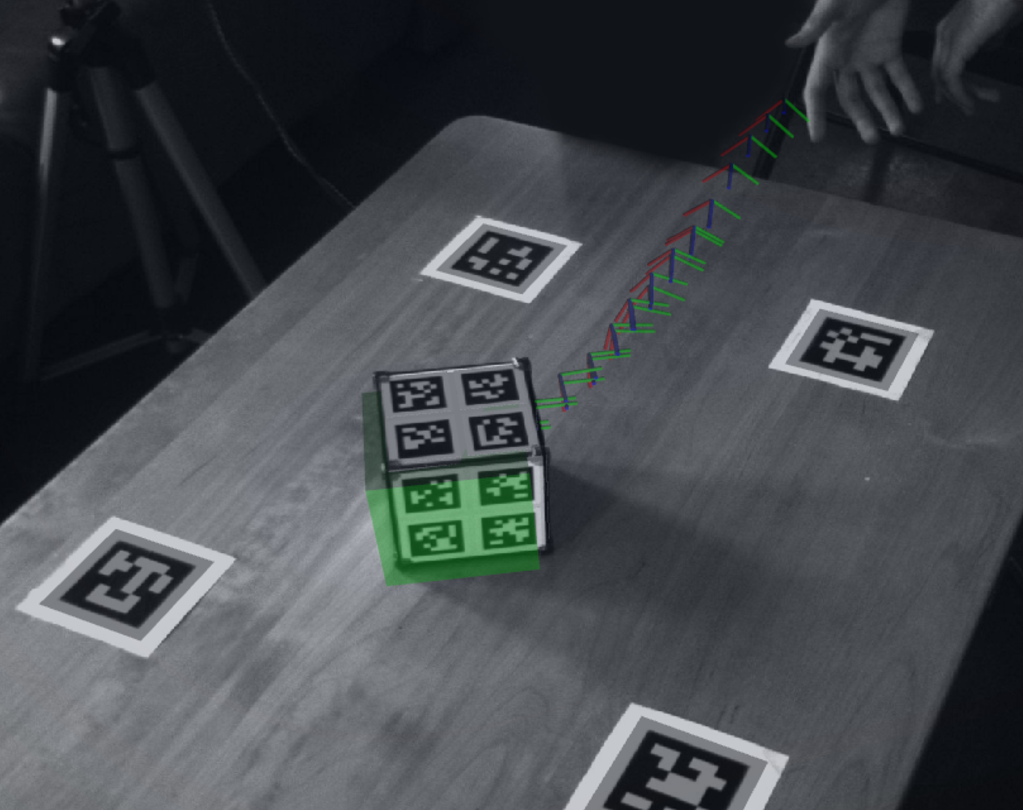

This work addresses a challenge common across robot manipulation and legged robotics: making and breaking contact. These situations are often tricky for robots because the dynamics are highly nonsmooth. Instead of differentiable motions, objects experience large, essentially instantaneous impulses at the moment of impact. Even during contact, stick-slip transitions are nonsmooth. Our goal was to learn these dynamics and evaluate our approach on a real-world 3D block tossing experiment.

Current methods do not fully address the fundamental nondifferentiability of the contact problem. A naively trained end-to-end method that attempts to predict forces as a function of state would be unable to accurately capture nonsmooth behavior, since neural networks are inherently differentiable. This leads to unrealistic contact behavior such as ground penetration and a lack of stiction.
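To make the stiffness concrete, here is a toy illustration that is not taken from the paper. It assumes a 1D point mass above flat ground with a perfectly inelastic impact; the names `signed_distance`, `normal_impulse`, and `slope` are hypothetical helpers made up for this sketch. In this toy model the impulse that prevents penetration changes with height at a rate of `-m/h` while in contact and `0` otherwise, so the map from state to impulse gets steeper as the time step `h` shrinks, whereas the signed distance keeps a slope of 1.

```python
# Toy 1D model (an assumption for illustration): a point mass above flat ground.

def signed_distance(z):
    """Gap between the point mass at height z and the ground at z = 0."""
    return z


def normal_impulse(z, v, h, m=1.0, g=9.81):
    """Impulse over one step of length h that just prevents penetration."""
    v_free = v - h * g                          # velocity if no contact occurs
    gap_next = signed_distance(z) + h * v_free  # predicted gap without contact
    return max(0.0, -m * gap_next / h)          # zero unless the ground must push


def slope(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


if __name__ == "__main__":
    for h in (1e-2, 1e-3, 1e-4):
        impulse_slope = slope(lambda z: normal_impulse(z, -1.0, h), 0.0)
        gap_slope = slope(signed_distance, 0.0)
        print(f"h={h:g}: d(impulse)/dz = {impulse_slope:.1f}, "
              f"d(gap)/dz = {gap_slope:.1f}")
```

Under these assumptions, a model regressing the impulse directly has to fit a function whose slope scales like `1/h`, while a model of the gap only has to fit a gently varying function.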

ContactNets addresses this challenge with a smooth parameterization of the contact problem. Instead of learning forces as a function of state, we learn a signed distance function between bodies as a function of state. This function is differentiable and therefore matches the inductive bias of neural networks. We use a similar trick to learn tangential contact Jacobians for friction. For each time step, we formulate the loss function as a quadratic program that attempts to match the observed dynamics while also respecting physical principles such as complementarity. The learned signed distance function and tangential Jacobian are then in a form that most conventional simulation methods, such as Stewart-Trinkle, can use directly. These simulators allow our models to predict nonsmooth dynamics while learning a smooth parameterization.
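The sketch below shows how a smooth signed distance can still produce nonsmooth motion once it is placed inside a complementarity-based time-stepping scheme. It is a heavily simplified, frictionless 1D sketch in the spirit of such schemes, not the paper's implementation and not a full Stewart-Trinkle simulator. The function `phi` is a hand-written stand-in for a learned signed distance, and `step` and `simulate` are hypothetical names. The tangential Jacobian and friction are omitted entirely.

```python
def phi(z):
    """Hypothetical stand-in for a learned signed distance (height above ground)."""
    return z


def step(z, v, h=0.01, m=1.0, g=9.81):
    """One frictionless, inelastic time step with a complementarity constraint.

    Finds lam >= 0 such that phi(z) + h * v_next >= 0 and
    lam * (phi(z) + h * v_next) == 0, which has a closed form in 1D.
    """
    v_free = v - h * g              # velocity after gravity alone
    gap = phi(z) + h * v_free       # predicted gap if no impulse is applied
    lam = max(0.0, -m * gap / h)    # smallest impulse that prevents penetration
    v_next = v_free + lam / m
    z_next = z + h * v_next
    return z_next, v_next, lam


def simulate(z0=0.5, v0=0.0, steps=100):
    """Drop the point mass and record (z, v, lam) after every step."""
    z, v = z0, v0
    trajectory = []
    for _ in range(steps):
        z, v, lam = step(z, v)
        trajectory.append((z, v, lam))
    return trajectory
```

Calling `simulate()` drops the mass from half a meter. While it is in the air the impulse `lam` stays at zero; on the step where the predicted gap would go negative, the impulse switches on and the velocity jumps to zero within a single step, even though `phi` itself is perfectly smooth.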

For further details, please see the paper, and especially Appendix A.1 for a lengthier explanation of the issues with end-to-end learning for contact.
